In 2026, embedded vision is shifting from headline specs to system survivability: fanless thermal budgets, rugged locking connectors, low-light signal-to-noise ratio (SNR), mainstream global shutter, edge understanding, and privacy-first retrofits for legacy deployments.

Date: January 3rd, 2026, Shenzhen, China. Source: Shenzhen Novel Electronics Limited

Embedded Vision Trends 2026: Fanless, Reliable, Privacy-First Edge AI

By 2026, embedded vision competitiveness is defined less by pixels or TOPS and more by system survivability: thermal behavior, mechanical reliability, and compliance. The winners will be those who deliver stable edge understanding under real-world constraints.

As embedded vision moves into 2026, the industry is entering a decisive transition. Competitive advantage is no longer defined by isolated specifications such as resolution, frame rate, or peak AI TOPS. Instead, system survivability—how well a vision system operates under real-world constraints—is becoming the dominant metric.

Across medical devices, robotics, industrial automation, and privacy-regulated digital signage, embedded vision systems are now evaluated by their thermal stability, power efficiency, mechanical reliability, and regulatory compliance, not just image quality.

One of the strongest signals shaping embedded vision trends in 2026 is the rise of fanless medical imaging systems. The “towerless” movement, illustrated by compact platforms from Outlook Surgical, reflects a broader shift toward quieter, sealed, and mobile medical devices.

In these architectures, modern SoCs and NPUs already consume most of the available thermal budget. Cameras are no longer peripheral components—they must operate within extremely tight thermal envelopes. Excess heat from a vision module can trigger throttling, compromise reliability, or prevent certification under standards such as IEC 60601.
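To make the thermal-budget argument concrete, the sketch below uses the common steady-state approximation T = T_ambient + P × θ. Here θ is a single lumped thermal resistance (°C/W) from the heat sources to ambient. This is a deliberate simplification that assumes every load shares one thermal path. The function names and all numbers in the example are hypothetical and are not measurements of any product.

```python
"""Rough steady-state thermal check for a fanless enclosure (illustrative sketch)."""


def steady_state_temp_c(ambient_c: float, power_w: float, theta_c_per_w: float) -> float:
    """Lumped model: temperature rise equals total dissipated power times thermal resistance."""
    return ambient_c + power_w * theta_c_per_w


def camera_power_headroom_w(ambient_c: float, limit_c: float,
                            theta_c_per_w: float, other_loads_w: float) -> float:
    """Power left for the camera before the shared thermal path reaches limit_c.

    Assumes the SoC/NPU, camera, and other loads all dissipate through the same
    lumped thermal resistance (a simplification, not a thermal simulation).
    """
    total_allowed_w = (limit_c - ambient_c) / theta_c_per_w
    return total_allowed_w - other_loads_w


if __name__ == "__main__":
    # Hypothetical design point: 25 °C ambient, 60 °C limit, 5 °C/W, 6 W already used by SoC/NPU.
    headroom = camera_power_headroom_w(ambient_c=25.0, limit_c=60.0,
                                       theta_c_per_w=5.0, other_loads_w=6.0)
    print(f"Camera power headroom: {headroom:.2f} W")
```

With these assumed numbers, the compute silicon leaves only about 1 W for the camera. This illustrates why module power draw becomes a first-order constraint in sealed designs.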

As a result, fanless embedded vision design is becoming a strategic requirement rather than a niche feature.

In outdoor robots, industrial drones, and autonomous mobile robots (AMRs), mechanical stability has become inseparable from system safety. While USB 3.2 Gen 2 offers sufficient bandwidth for most edge vision systems, standard USB connectors were never designed for sustained vibration.
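Simple arithmetic can sanity-check the bandwidth claim. The sketch assumes uncompressed YUY2 (2 bytes per pixel) at 30 fps. It compares that rate against the nominal 10 Gbit/s USB 3.2 Gen 2 signaling rate. Real usable throughput is lower once line encoding and protocol overhead are included, so treat the result as an optimistic comparison.

```python
"""Back-of-envelope check: does an uncompressed stream fit in USB 3.2 Gen 2?"""

# Nominal USB 3.2 Gen 2 signaling rate; usable payload is lower after
# line encoding and protocol overhead.
USB32_GEN2_GBPS = 10.0


def raw_stream_gbps(width: int, height: int, bytes_per_pixel: float, fps: float) -> float:
    """Uncompressed payload bit rate in Gbit/s (ignores headers and blanking)."""
    return width * height * bytes_per_pixel * fps * 8 / 1e9


if __name__ == "__main__":
    # YUY2 (YUV 4:2:2) uses 2 bytes per pixel.
    for name, (w, h) in {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}.items():
        rate = raw_stream_gbps(w, h, bytes_per_pixel=2, fps=30)
        share = rate / USB32_GEN2_GBPS
        print(f"{name} YUY2 @ 30 fps: {rate:.2f} Gbit/s ({share:.0%} of nominal rate)")
```

Under these assumptions, even an uncompressed 4K YUY2 stream at 30 fps uses well under half of the nominal line rate. This supports the point that bandwidth is rarely the limiting factor. Connector retention under vibration is the harder problem.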

In robotics and industrial automation, a momentary disconnect is not a user inconvenience—it is a potential system failure. This is driving a shift toward locking connectors, board-to-board camera integration, and vibration-resistant interfaces.

In production deployments, eliminating loose cables in favor of mechanically secured camera connections is increasingly considered best practice for industrial embedded vision.
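Mechanical retention is the primary fix. Software should still treat a dropped camera as a fault rather than wait indefinitely. The sketch below is a hypothetical frame watchdog. The `FrameWatchdog` class, its `timeout_s` parameter, and the `on_fault` callback are illustrative names, not part of any camera SDK. The clock is injectable so the logic can be exercised without hardware.

```python
import time
from typing import Callable


class FrameWatchdog:
    """Reports a fault when no frame has arrived within timeout_s (hypothetical helper)."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self._clock = clock
        self._last_frame = clock()

    def frame_received(self) -> None:
        """Call from the capture path each time a valid frame arrives."""
        self._last_frame = self._clock()

    def is_stale(self) -> bool:
        """True if the gap since the last frame exceeds the timeout."""
        return self._clock() - self._last_frame > self.timeout_s


def control_step(watchdog: FrameWatchdog, on_fault: Callable[[], None]) -> bool:
    """One control-loop iteration: invoke the safe-state callback if the camera has gone quiet.

    Returns True if the fault path was taken.
    """
    if watchdog.is_stale():
        on_fault()  # e.g. command a controlled stop; the real action is application-specific
        return True
    return False
```

The default clock is `time.monotonic` rather than wall-clock time. This avoids false faults when the system clock is adjusted.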

Low-light imaging has entered a new phase. Technologies such as Sony STARVIS and OmniVision’s Nyxel platform are not simply improving brightness—they are redefining the quality of data delivered to AI inference engines.

By 2026, it is increasingly recognized that, once resolution is adequate, AI inference accuracy depends more on signal-to-noise ratio (SNR) than on raw pixel count. In logistics robots, night-time inspection systems, and outdoor edge vision platforms, noisy or motion-blurred images cause sharp drops in detection accuracy regardless of available compute.

Clean low-light input data has become a foundational requirement for reliable edge AI vision systems.
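Estimating SNR from a nominally uniform image patch shows why low-light data quality matters. The estimate is 20·log10(mean / standard deviation). This sketch treats all pixel variation in the patch as noise, so it assumes the patch really is flat and evenly lit. The sample values are made up for illustration.

```python
import math
import statistics
from typing import Sequence


def patch_snr_db(pixels: Sequence[float]) -> float:
    """Estimate SNR (dB) of a flat patch as 20*log10(mean / population std dev)."""
    mu = statistics.fmean(pixels)
    if mu <= 0:
        raise ValueError("patch mean must be positive")
    sigma = statistics.pstdev(pixels)
    if sigma == 0:
        return math.inf  # no measurable noise in this sample
    return 20 * math.log10(mu / sigma)


if __name__ == "__main__":
    bright = [100, 102, 98, 100]  # illustrative values with the same spread as below
    dark = [10, 12, 8, 10]
    print(f"bright patch: {patch_snr_db(bright):.1f} dB")
    print(f"dark patch:   {patch_snr_db(dark):.1f} dB")
```

With the same noise spread, the darker patch scores 20 dB lower. That is the kind of degradation better low-light sensors aim to reduce before the data reaches an inference engine.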

Another clear trend is the mainstream adoption of global shutter imaging. As sensor vendors introduce more compact and power-efficient designs, global shutter is no longer limited to high-end machine vision.

For robotics, SLAM, and high-speed inspection, rolling shutter distortion undermines geometric consistency and mapping accuracy. As a result, global shutter cameras for robotics are rapidly becoming the default rather than the exception.
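A first-order model estimates the geometric effect of rolling shutter. If rows are read out sequentially with line time t_line, the last row is captured about rows × t_line after the first. An object moving across the image at v pixels per second is therefore sheared by roughly v × rows × t_line pixels. The sketch assumes constant motion during readout and ignores lens distortion. The numbers are hypothetical.

```python
def readout_time_s(rows: int, line_time_s: float) -> float:
    """Time between the first and last row starting exposure in a rolling-shutter sensor."""
    return rows * line_time_s


def rolling_shutter_skew_px(image_speed_px_per_s: float, rows: int, line_time_s: float) -> float:
    """Approximate horizontal shear between top and bottom rows (first-order, constant motion).

    A global-shutter sensor exposes all rows simultaneously, so this term is ~0 for it.
    """
    return image_speed_px_per_s * readout_time_s(rows, line_time_s)


if __name__ == "__main__":
    # Hypothetical: 1080 rows, 15 microseconds per line, feature moving 2000 px/s across the image.
    skew = rolling_shutter_skew_px(2000.0, rows=1080, line_time_s=15e-6)
    print(f"readout: {readout_time_s(1080, 15e-6) * 1e3:.1f} ms, skew: {skew:.1f} px")
```

A shear of tens of pixels can be large relative to the feature-localization accuracy that SLAM pipelines aim for. Global shutter removes this term entirely.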

Industry opinion is converging on one conclusion: motion integrity at the sensor level is essential for AI-driven perception.

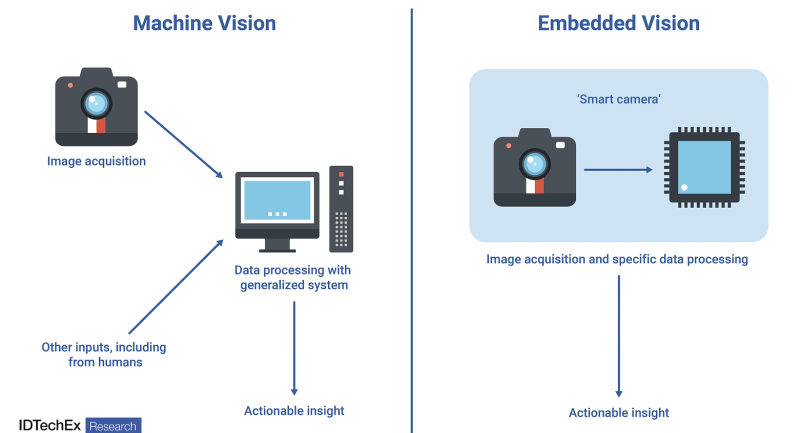

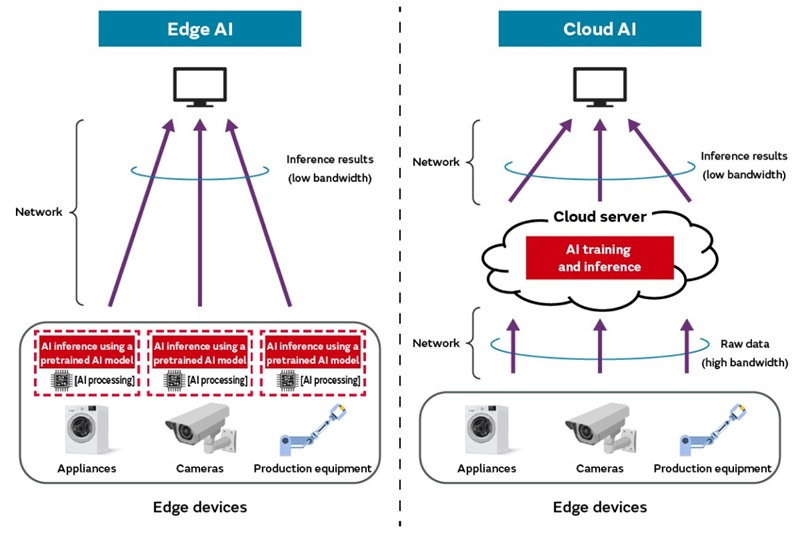

Perhaps the most structural change is the shift from “seeing” to “understanding” at the edge. Vision systems are no longer expected to stream raw video continuously. Instead, they are designed to perform edge analytics and output structured metadata—counts, events, classifications—in real time.

This transition is driven by latency constraints, bandwidth efficiency, and increasingly strict privacy regulations. In many deployments, particularly in Europe, transmitting identifiable video data off-device is no longer acceptable.
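A compact event record illustrates the shift from streaming video to emitting structured metadata. The schema below (`PerceptionEvent` and its fields) is hypothetical and not a published standard. It shows how a count or classification can be serialized into about a hundred bytes. By comparison, a single uncompressed 1080p YUY2 frame is roughly 4 MB (1920 × 1080 × 2 bytes).

```python
import json
from dataclasses import asdict, dataclass

FRAME_BYTES_1080P_YUY2 = 1920 * 1080 * 2  # one uncompressed 4:2:2 frame


@dataclass(frozen=True)
class PerceptionEvent:
    """Hypothetical metadata record emitted instead of raw video."""
    device_id: str
    timestamp_ms: int
    event: str          # e.g. "zone_count"
    label: str          # class name from the on-device model
    count: int
    confidence: float


def encode_event(evt: PerceptionEvent) -> bytes:
    """Serialize to compact JSON; the payload carries no pixel data."""
    return json.dumps(asdict(evt), separators=(",", ":")).encode("utf-8")


if __name__ == "__main__":
    evt = PerceptionEvent("cam-01", 1767398400000, "zone_count", "person", 3, 0.91)
    payload = encode_event(evt)
    print(payload.decode())
    print(f"{len(payload)} bytes vs {FRAME_BYTES_1080P_YUY2} bytes per raw frame")
```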

As a result, cameras, often in the form of low-power USB camera modules, are evolving into intelligent perception nodes within edge AI architectures.

In digital signage, growth is centered on retrofitting existing displays rather than installing new screens. Advertisers demand audience verification and proof-of-display metrics, while regulators enforce privacy-by-design requirements under GDPR and the EU AI Act.

This has accelerated demand for privacy-first embedded vision solutions that process data locally and output anonymized results—such as audience counts and dwell time—without transmitting video streams.
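Audience counts and dwell time can be derived from short-lived on-device tracks without retaining anything identifying. The sketch assumes a hypothetical tracker that yields `(track_id, enter_s, exit_s)` tuples. Only aggregates leave the function. The `min_viewers` small-count suppression is an illustrative privacy safeguard, not a requirement quoted from GDPR or the EU AI Act.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class AudienceSummary:
    viewers: int
    mean_dwell_s: Optional[float]  # None when suppressed for small groups


def summarize(tracks: Iterable[Tuple[int, float, float]], min_viewers: int = 5) -> AudienceSummary:
    """Aggregate per-track (track_id, enter_s, exit_s) tuples into anonymous totals.

    Track IDs are discarded here and never appear in the output. When fewer than
    min_viewers are seen, dwell time is withheld to reduce re-identification risk.
    """
    dwell = [exit_s - enter_s for _, enter_s, exit_s in tracks if exit_s >= enter_s]
    if len(dwell) < min_viewers:
        return AudienceSummary(viewers=len(dwell), mean_dwell_s=None)
    return AudienceSummary(viewers=len(dwell), mean_dwell_s=mean(dwell))
```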

For programmatic digital out-of-home (pDOOH) retrofit projects, the winning architectures turn legacy screens into compliant, data-aware endpoints at minimal cost.

By 2026, embedded vision success will be determined less by peak specifications and more by system discipline. Thermal behavior, mechanical reliability, low-light data quality, global shutter integrity, edge understanding, and privacy compliance are now first-order design parameters.

The next generation of embedded vision systems will be defined not by how well they see—but by how reliably, efficiently, and responsibly they understand the world at the edge.