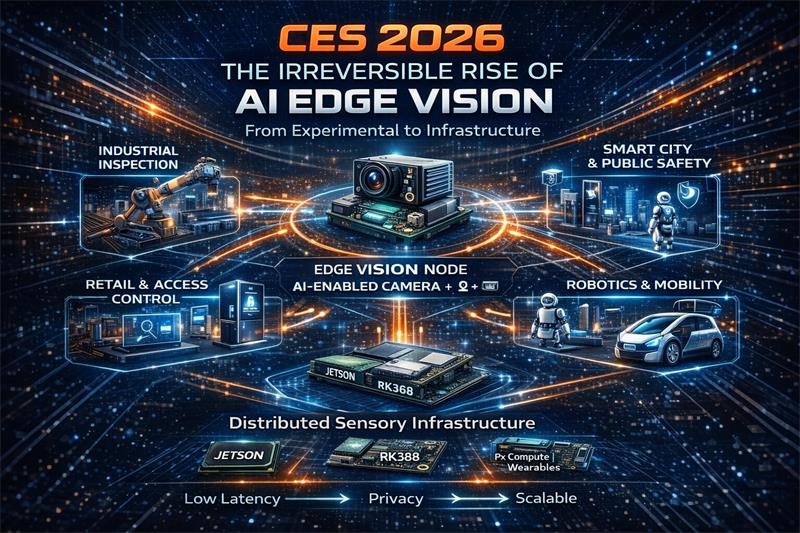

Unlike previous years, when artificial intelligence was framed as a cloud-first computing paradigm, CES 2026 demonstrated a structural shift: vision-enabled edge AI is moving from experimental capability to cross-industry infrastructure.

The shift is visible not only in new product announcements, but also in supply chain maturity, developer ecosystem behavior, cost curves and deployment patterns across the physical world.

Across multiple domains—industrial, fleets, retail, smart buildings, healthcare, logistics, robotics and consumer devices—cameras equipped with on-device AI inference have transitioned from “nice-to-have features” to “baseline sensory interfaces”.

The key pattern was consistent:

cameras are no longer data capture endpoints; they are real-time perception interfaces.

Evidence from CES 2026 included:

smart city and public safety systems that detect anomalies on weather-robust outdoor cameras rather than on centralized servers

industrial inspection nodes embedded near conveyor lines and CNC machines producing structured defect data

retail and access control terminals combining RGB/IR cameras for identity and basic health screening

assisted mobility devices offering navigation for visually impaired users using multi-camera fusion

fleet and transportation systems using low-light and weather-resilient optics for night driving and ADAS assistance

These scenarios share a common set of requirements: low latency, privacy pressure, distributed nodes and continuous perception, all of which favor edge deployment over cloud workflows.

Unlike past years dominated by conceptual demos, CES 2026 showcased commercially motivated deployments with clear budgets and operational incentives.

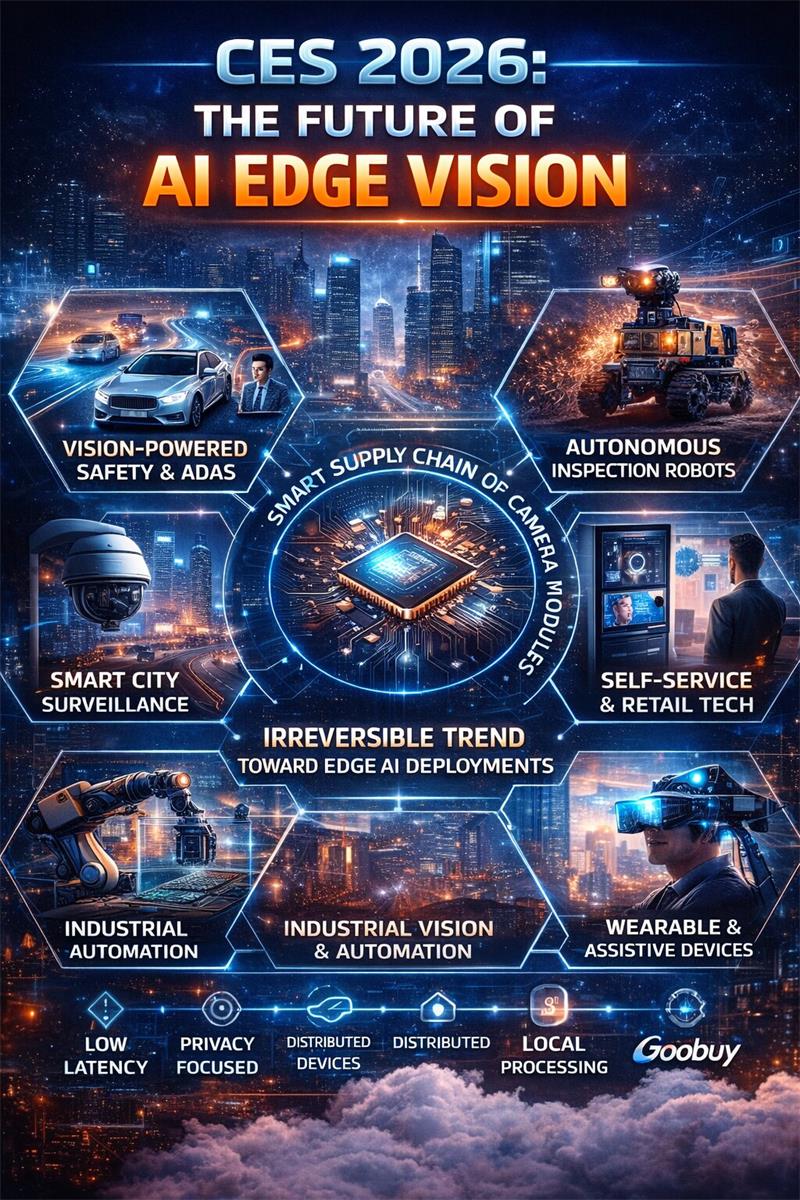

The strongest demand clusters emerged in six categories:

Safety & Compliance

Fleet ADAS cameras for hazardous weather, door and industrial zone safety systems, environmental anomaly detection and 24/7 monitoring robots.

Inspection & Asset Monitoring

Vision-enabled robots for infrastructure inspection, mining, power grids and oil & gas assets with autonomous defect detection.

Smart City & Public Services

Distributed surveillance nodes that process events locally and send only structured data upstream for triage and archiving.

Retail & Access Control Terminals

Biometric & health-screening terminals combining RGB + IR + structured illumination for building entry and frictionless retail.

Assisted Robotics & Wearables

Mobility aids for visually impaired users, home care robots and spatial computing devices that interpret their environment in real time.

Consumer & SME Security

4K multi-spectrum security cameras combining optical + infrared + AI inference as default configurations rather than premium options.

These segments share one important feature: a willingness to pay for continuous, automated perception.

OEM and module suppliers demonstrated significant maturity at three levels:

(1) Compute Silicon

Edge AI processors from multiple vendors offered 10 to 100+ TOPS of performance within power envelopes under 10 W, along with integrated image signal processor (ISP) pipelines for multi-camera workloads.

This category included:

AI SoCs

NPUs and inference accelerators

neural ISP pipelines

embedded AI compute boards
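To make the TOPS figures above more concrete, the following is a minimal back-of-envelope sketch of how a quoted peak throughput might translate into frames per second. The function names, the per-frame model cost and the utilization factor are illustrative assumptions, not vendor data; real throughput depends heavily on the model, quantization and memory bandwidth.

```python
def max_frames_per_second(peak_tops: float,
                          gops_per_frame: float,
                          utilization: float = 0.25) -> float:
    """Rough upper bound on inference rate for one accelerator.

    peak_tops:      vendor-quoted peak, in tera-operations per second
    gops_per_frame: model cost per frame, in giga-operations (assumed value)
    utilization:    fraction of peak actually sustained (assumed, workload-dependent)
    """
    if peak_tops <= 0 or gops_per_frame <= 0:
        raise ValueError("peak_tops and gops_per_frame must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    sustained_ops_per_second = peak_tops * 1e12 * utilization
    return sustained_ops_per_second / (gops_per_frame * 1e9)


def tops_per_watt(peak_tops: float, watts: float) -> float:
    """Efficiency metric commonly used to compare edge accelerators."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return peak_tops / watts


def per_camera_fps(total_fps: float, cameras: int) -> float:
    """Split a shared inference budget evenly across several cameras."""
    if cameras < 1:
        raise ValueError("cameras must be at least 1")
    return total_fps / cameras
```

Under these assumptions, a 10 TOPS part running a hypothetical 20 GOPs-per-frame model at 25% utilization would sustain roughly 125 frames per second in total, or about 31 frames per second per camera when shared across four cameras.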

(2) Camera & Imaging Modules

Camera suppliers showcased USB, MIPI, GMSL and GigE vision modules with options for:

low-light STARVIS sensors

IR and RGB-IR imaging

varifocal & optical zoom

fisheye & panoramic

ToF & structured light

thermal & multi-spectrum configurations

Modules were available as:

bare board modules for integrators

enclosed variants for field use

IP-rated housings for industrial environments

extended lifecycle SKUs for OEM deployments

(3) Turnkey Devices & Systems

Robots, terminals, drones and security appliances integrated perception + inference + connectivity into deployable form factors, signaling that vendors are shifting from technical demonstrations to revenue-driven offerings.

Despite the excitement around AI, the strongest argument for edge vision at CES 2026 was economic:

Cloud video processing is expensive in bandwidth, storage and inference cost.

Human monitoring does not scale for safety, compliance or inspection workloads.

Privacy regulation increasingly restricts raw video transfer across borders or organizations.

By contrast, edge nodes can:

✔ infer locally

✔ compress semantics, not pixels

✔ transmit events, not streams

✔ reduce cloud dependence

✔ satisfy privacy and compliance constraints

✔ preserve operational continuity during outages

For decision makers, this reframes edge vision from an “AI feature” into a total cost of ownership (TCO) optimization strategy.
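The "transmit events, not streams" argument can be illustrated with simple arithmetic. The sketch below compares daily upload volume for a continuously streamed camera with that of a node sending only event metadata. The bitrate, event count and event size used in the example are hypothetical placeholders; substitute measured values for a real deployment.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def stream_gb_per_day(bitrate_mbps: float) -> float:
    """Daily upload volume, in gigabytes, for one continuously streamed camera."""
    bits_per_day = bitrate_mbps * 1e6 * SECONDS_PER_DAY
    return bits_per_day / 8 / 1e9


def events_gb_per_day(events_per_day: int, bytes_per_event: int) -> float:
    """Daily upload volume, in gigabytes, when only structured events are sent."""
    return events_per_day * bytes_per_event / 1e9


def reduction_factor(bitrate_mbps: float,
                     events_per_day: int,
                     bytes_per_event: int) -> float:
    """How many times smaller the event traffic is than the raw stream."""
    return stream_gb_per_day(bitrate_mbps) / events_gb_per_day(
        events_per_day, bytes_per_event
    )
```

With an assumed 4 Mbps video stream, one camera uploads about 43.2 GB per day. If the same node instead sends 2,000 events of 1 kB each, it uploads about 0.002 GB per day, a reduction on the order of 20,000 times under these assumptions. Storage and cloud inference costs are not modeled here.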

A less visible but equally important signal at CES 2026 was ecosystem behavior:

chipmakers promoted developer platforms rather than chips

tools included simulation and virtual dev boards

camera vendors exposed standard interfaces and drivers

robotics vendors adopted plug-and-play perception modules

vision SDKs normalized multi-sensor fusion

integrators sold deployment and MLOps contracts, not one-time systems

This indicates the market is transitioning from proof-of-concept (PoC) AI toward continuously deployed AI.

Two convergence patterns stood out:

(1) Physical World Convergence

Robotics + security + retail + mobility + healthcare all converged on the same primitives:

camera → local inference → actionable event → workflow integration
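The four-stage pattern above can be sketched in a few lines. This is a simplified illustration, not any vendor's implementation: the `Event` fields, the `infer` placeholder (which scores mean brightness rather than running a neural network) and the `dispatch` callback are all hypothetical stand-ins for a real camera driver, NPU runtime and message transport.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Callable, Iterable, List, Optional

# Stand-in for a real image buffer: rows of grayscale pixel values (0-255).
Frame = List[List[int]]


@dataclass
class Event:
    """Structured record sent upstream in place of raw video (hypothetical schema)."""
    camera_id: str
    label: str
    score: float
    timestamp: float


def infer(frame: Frame) -> float:
    """Placeholder for on-device inference: mean brightness scaled to 0..1.

    A real node would run a neural network on an NPU at this step.
    """
    pixels = [p for row in frame for p in row]
    if not pixels:
        raise ValueError("empty frame")
    return sum(pixels) / (255 * len(pixels))


def to_event(camera_id: str, score: float, threshold: float) -> Optional[Event]:
    """Turn a raw score into an actionable event, or None if below threshold."""
    if score < threshold:
        return None
    return Event(camera_id, "anomaly", round(score, 3), time.time())


def run_pipeline(camera_id: str,
                 frames: Iterable[Frame],
                 dispatch: Callable[[str], None],
                 threshold: float = 0.5) -> int:
    """camera -> local inference -> actionable event -> workflow integration.

    Returns the number of events dispatched; raw frames never leave the node.
    """
    sent = 0
    for frame in frames:
        event = to_event(camera_id, infer(frame), threshold)
        if event is not None:
            dispatch(json.dumps(asdict(event)))  # e.g. a queue, webhook or broker
            sent += 1
    return sent
```

In use, `dispatch` could be as simple as `outbox.append` for testing, or a function that publishes to whatever workflow system the deployment integrates with. Only frames that cross the threshold produce any upstream traffic.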

(2) Hardware & Software Convergence

ISPs, NPUs, sensors, drivers and inference engines are collapsing into unified “vision stacks” shipped as OEM platforms.

From a strategic perspective, the shift now appears irreversible:

cost curves are falling

supply chains are stabilizing

ecosystems are committing

regulations are pushing on-device processing

developers are building for edge first

customers are budgeting for perpetual deployments

As vision nodes proliferate across cities, factories, retail spaces, vehicles and homes, the physical devices closest to the environment require miniaturized, reliable, customizable camera modules compatible with embedded compute platforms.

This creates sustained demand for:

micro USB cameras

low-light sensors

optical zoom modules

fisheye & panoramic modules

USB UVC compatibility

long lifecycle component availability

mechanical customization for constrained envelopes

Goobuy (Professional Micro USB Camera for AI Edge Vision) supports OEMs and integrators by supplying compact USB camera modules optimized for:

embedded AI boxes

edge computers

industrial terminals

robotics platforms

inspection devices

medical and retail kiosks

with customization options for housing, optics, connectors, cabling, firmware and lifecycle management.

CES 2026 clarified that AI edge vision is no longer an experimental field—it is becoming a foundational layer for how the physical world will be monitored, automated and governed.

For companies operating in developed markets, the strategic question is shifting from:

“What can AI do?”

to:

“How fast can we deploy distributed perception infrastructure at scale?”

The organizations that succeed will be those that treat edge vision as a platform capability rather than a set of disconnected pilots, and those that partner with suppliers capable of delivering reliable, customizable vision hardware for real-world conditions.

Technical FAQ for CES 2026

Q1: What does “AI edge vision” mean in a commercial context?

A: AI edge vision refers to the use of camera-equipped devices that perform local AI inference on images or video streams to produce structured events without sending raw data to the cloud. This enables low-latency decision-making, reduces bandwidth and storage costs, helps meet privacy regulations, and supports distributed deployments across fleets, factories, cities, buildings and retail environments.

Q2: Why did CES 2026 signal a shift toward edge vision as infrastructure?

A: CES 2026 showed that edge vision is no longer experimental. The supply chain for sensors, camera modules, edge compute, ISP pipelines and developer platforms has reached commercial readiness. The strongest adoption signals came from safety, compliance, inspection, smart city, robotics, access control and retail systems—segments that treat continuous perception as operationally essential.

Q3: Which industries are deploying edge vision first and why?

A: Early deployment is strongest in industries that require continuous perception, including industrial automation, fleet operations, public safety, smart city systems, retail access control, healthcare terminals and autonomous robotics. These sectors benefit from low latency, on-device privacy, reduced human monitoring, and scalable distributed nodes.

Q4: Why is edge vision considered economically superior to cloud-based AI video processing?

A: Cloud-first video processing suffers from high bandwidth, storage and inference costs, and introduces privacy, compliance and data sovereignty barriers. Edge vision nodes process frames locally, transmit only events or metadata, and operate during network interruptions. Over time, this yields lower TCO and reduced dependency on manual supervision.

Q5: How are hardware and software ecosystems converging at the edge?

A: Chipmakers now ship AI SoCs with NPU + ISP + multi-camera pipelines. Camera vendors provide USB/MIPI/GMSL/GigE modules with long lifecycle support. Developer platforms support local inference SDKs, virtual development boards, and deployment toolchains. Robotics vendors integrate vision as default sensory input. This is forming a unified “edge vision stack” instead of fragmented pilots.

Q6: What regulatory or policy trends are accelerating edge vision adoption?

A: Privacy and data protection rules discourage raw video transmission, especially across borders or public-private boundaries. Safety and compliance regulations favor autonomous inspection and continuous monitoring. ESG frameworks encourage predictive maintenance and automated infrastructure reporting. Together, these create incentives for on-device perception and local event processing.

Q7: Why is the shift toward AI edge vision considered irreversible?

A: The shift is driven by structural forces:

declining cost curves for NPUs and sensors

supply chain maturity for camera modules

developer ecosystem standardization

cross-industry adoption

privacy and sovereignty constraints

latency-sensitive workloads

Once these dependencies are established, the deployment model becomes sticky and difficult to reverse.

Q8: What role do specialized camera modules play in the edge vision ecosystem?

A: Distributed vision nodes require miniaturized, reliable, low-power camera modules capable of interfacing with edge compute platforms over USB, MIPI, GMSL or GigE. Imaging options such as low-light sensors, fisheye lenses, varifocal zoom and thermal modules enable application-specific performance. Module customization, including housing, connectors and firmware, is essential for OEM-scale deployments.