Physical AI is accelerating the transition from software-based intelligence to real-world autonomy, where robots, machines and infrastructure require reliable perception at the edge. USB camera modules enable rapid vision onboarding, dataset collection and early deployment for robotics, industrial automation and service systems before scaling to production interfaces.

USB Cameras for Physical AI: 20+ Edge Vision Scenarios

In the last decade, AI mostly lived in the cloud and on our screens.

In the next decade, it will live in robots, machines and infrastructure.

During CES 2026, NVIDIA CEO Jensen Huang formally introduced Physical AI as the next epoch of computing. In his keynote, he stated: “The ChatGPT moment for robotics is here. Breakthroughs in physical AI — models that understand the real world, reason and plan actions — are unlocking entirely new applications.”

NVIDIA’s own technical materials define Physical AI as: “AI that enables autonomous machines to perceive, understand, reason and perform or orchestrate complex actions in the physical world.”

This marks a major transition in the trajectory of AI.

For the last decade, AI primarily lived in the cloud and on screens: generating text, generating images, and powering digital services. In the decade ahead, AI will increasingly inhabit robots, machines, vehicles, infrastructure and industrial systems operating in warehouses, factories, hospitals, data centers, ports, retail environments and energy networks.

This shift rewires the architecture of AI: from digital inference to real-world autonomy.

Once AI leaves the browser and enters the physical environment, one layer suddenly becomes non-optional and mission-critical:

Perception — the camera and sensor layer.

Without perception, no Physical AI system can form a world model, detect objects, plan a trajectory, anticipate risk, or execute a safe action. In real deployments, perception becomes the first bottleneck, the first failure mode and the first system that must leave the controlled lab and enter uncontrolled reality.

And at the edge (on robots, vehicles, inspection platforms and autonomous infrastructure), many perception workloads run on compact, embedded USB cameras, which provide the fastest path to real-world sensing, model validation and deployment.

Cloud AI answers questions; Physical AI has to move atoms.

A Physical AI system must:

Perceive – capture visual data from the environment

Understand – detect objects, people, surfaces, affordances

Reason & plan – decide what to do next

Act – control motors, grippers, brakes, tools

Close the loop in real time

If the first step – perception – is wrong or missing, everything above collapses. No world model, no plan, no safe action.
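The loop above can be sketched in a few lines of Python. This is a minimal illustration rather than a real robot stack: the `Detection` type, the 1 m stop distance and the 100 ms time budget are all assumptions made for the example, and a "frame" is simply a list of detections standing in for a detector's output. It shows the point of the paragraph above: when perception is missing or late, the only safe command is to stop.

```python
import time
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detector output: what was seen and how far away."""
    label: str
    distance_m: float


def understand(frame):
    """Stand-in for a perception model: the 'frame' already holds detections."""
    return frame


def plan(detections, stop_distance_m=1.0):
    """Stop if any detected object is closer than the stop distance."""
    if any(d.distance_m < stop_distance_m for d in detections):
        return "stop"
    return "go"


def run_loop(frames, act, budget_s=0.1):
    """Run perceive -> understand -> plan -> act once per frame."""
    commands = []
    for frame in frames:
        start = time.perf_counter()
        if frame is None:
            # Perception is missing, so no safe plan is possible.
            command = "stop"
        else:
            command = plan(understand(frame))
            if time.perf_counter() - start > budget_s:
                # The result arrived too late to act on safely.
                command = "stop"
        act(command)
        commands.append(command)
    return commands
```

In a real system `act` would drive motors or brakes; here it can be any callable, such as `print` or a list's `append` method.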

That is why:

Warehouse AMRs cannot rely on cloud latency

Industrial robots cannot depend on a single overhead camera

Inspection robots cannot pause to upload every frame

Humanoid robots cannot walk blindly through a factory

Edge AI + embedded vision is the only realistic architecture, and USB cameras are often the fastest way to build and iterate that vision layer.

Why use USB Cameras for Physical AI?

USB cameras are a common choice for Edge AI prototyping because UVC devices work with the class drivers already present on NVIDIA Jetson and other Linux platforms, so no custom driver is needed. They allow robotics engineers to validate VSLAM and object detection models rapidly before migrating to mass-production interfaces like MIPI CSI-2.

A lot of Physical AI vision eventually migrates to MIPI CSI-2, GMSL or custom camera boards in mass production. But almost every serious project goes through the same phases:

1. Concept & feasibility – prove that a model works on real images

2. Prototype & pilot – mount a camera on the robot or machine, collect data, tune the pipeline

3. Pre-production – harden the design, optimize latency and thermal budget

4. Production – lock components and interfaces for long-term supply

In phases 1–3, a USB camera for edge AI has unique advantages:

Plug-and-play with Jetson, RK3588, x86 and industrial PCs

UVC standard – no custom drivers in early stages

Easy integration with Python, C++, ROS2, Isaac, OpenCV and GStreamer

Fast lens swaps and repositioning during field tests

Reusable as a lab / test rig camera even after the product moves to MIPI or GMSL

That is why a compact module like the Goobuy UC-501 micro USB camera can be found on the desks of:

Robotics R&D teams

AMR startups

Industrial automation integrators

Energy inspection solution providers

Smart-retail and kiosk designers

even if the final robot eventually ships with a different connector.
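As a rough illustration of the plug-and-play point above, the sketch below reads frames from a UVC camera through OpenCV's `VideoCapture`. It assumes the `opencv-python` package is installed and that the camera appears as device index 0; neither is guaranteed on a given board. `FakeCapture` is a hypothetical stand-in added here so the frame-grabbing logic can be dry-run without hardware.

```python
def open_uvc_camera(index=0, width=640, height=480):
    """Open a UVC camera through OpenCV (requires the opencv-python package)."""
    import cv2  # imported lazily so the helpers below work without OpenCV

    cap = cv2.VideoCapture(index)
    if not cap.isOpened():
        raise RuntimeError(f"could not open camera index {index}")
    # The driver may pick the nearest supported mode instead of these values.
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, width)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, height)
    return cap


def grab_frames(cap, count):
    """Read up to `count` frames from any object with an OpenCV-style read()."""
    frames = []
    for _ in range(count):
        ok, frame = cap.read()
        if not ok:  # camera unplugged or stream ended
            break
        frames.append(frame)
    return frames


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture, for dry runs without hardware."""

    def __init__(self, frames):
        self._frames = list(frames)

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None
```

With a camera attached, a typical session would be `cap = open_uvc_camera(0)`, then `frames = grab_frames(cap, 30)`, then `cap.release()`.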

While this article is not a spec sheet, it is useful to anchor the discussion around three representative modules that cover most Physical AI vision needs:

UC-501 micro USB camera

Ultra-compact 15×15 mm PCB with miniature lens options

USB UVC interface for cross-platform use

Designed for tight spaces in robots and embedded boxes

Ideal for prototyping Physical AI perception on NVIDIA Jetson Orin Nano, Raspberry Pi 5, and Rockchip RK3588 platforms

A rolling-shutter module, suitable for applications where some distortion under fast motion is acceptable

Typical strengths:

AMRs and warehouse robots where space in the front bumper or mast is limited

Inside data-center robots, kiosks, digital signage players or retail terminals

Anywhere a standard board camera or webcam simply does not fit

OV9281 global shutter camera

1MP global shutter sensor

Excellent for high-speed motion and fast object movement

Available as USB or MIPI (depending on configuration)

Avoids rolling-shutter distortion during movement, vibration or fast manipulation

Typical strengths:

Robotic arms, pick-and-place and bin-picking

Conveyor inspection lines

Docking and alignment tasks in vehicles or mobile robots

Cranes, forklifts, construction and mining assist systems

Starvis USB camera module

Sony Starvis sensors with starlight low-light sensitivity

Designed for dark warehouses, night-shift operations and outdoor environments

USB interface for quick integration and evaluation

Wide range of lenses, from wide-FOV navigation to telephoto inspection

Typical strengths:

Night-time logistics and warehouse robots

Energy and infrastructure inspection at dawn/dusk or indoors

Security and patrol robots

Hospital and hotel robots operating in low-light corridors

Together, these three categories cover most Physical AI vision use-cases you will see between 2026 and 2029.

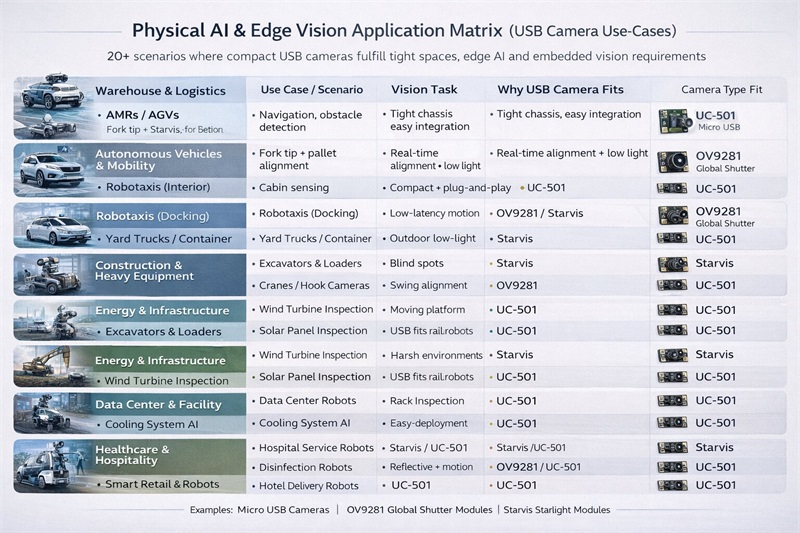

Below is a non-exhaustive matrix of industries where a USB camera for Physical AI and edge AI vision plays a central role. Many of these scenarios begin with USB modules in R&D, then migrate to MIPI / GMSL while keeping USB for testing, QA and toolchains.

I group them into eight domains.

AMRs & AGVs in Warehouses

Navigation, obstacle avoidance, pallet detection

UC-501 or Starvis USB in front bumper / mast for low-light aisles

Autonomous Forklifts & Tuggers

Fork tip vision, pallet slot alignment, load monitoring

OV9281 global shutter for motion and vibration; Starvis for low-light docks

Collaborative Robots (Cobots) on the Line

Part detection, hand-eye calibration, safety zones

OV9281 near the end-effector; UC-501 embedded in fixtures

Robotic Bin-Picking Cells

Top-down or side-mounted cameras for random bin contents

Global shutter modules to avoid rolling-shutter distortion when the gripper moves quickly

Inline Quality Inspection in Smart Factories

Defect detection, label reading, assembly verification

Starvis USB cameras for HDR or mixed lighting; later migrated to MIPI

Robotaxis & Shuttles – Interior & Docking Vision

Cabin monitoring, HMI interaction, door area and charging port alignment

UC-501 for interior modules; OV9281 for fast docking camera views

Autonomous Yard Trucks & Terminal Tractors

Trailer connection alignment, rear view assistance, safety zones

Starvis cameras for night-time operations in ports and terminals

Autonomous Parking & Valet Systems

Compact cameras in bumpers, mirrors and pillars for environment sensing

UC-501 for quick prototyping with Jetson-based parking controllers

Excavators, Loaders and Bulldozers

Blind-spot cameras, bucket or blade view, safety perimeter

Starvis USB in R&D vehicles; OV9281 for high-motion positions

Cranes and Hook Cameras

Hook-mounted cameras for lifting guidance and alignment

Global shutter to avoid distortion during swinging motion

Mining Haul Trucks and Underground Vehicles

Low-light, dust and vibration; situational awareness for assisted or autonomous modes

Starlight sensors are particularly valuable here

Wind Turbine Inspection Robots & Drones

Close-range blade inspection, tower structure, cable routing

OV9281 for moving platforms; Starvis for dawn/dusk wind-farm conditions

Solar Farm Inspection & O&M Robots

Panel surface inspection, crack or soiling detection, connector checks

UC-501 mounted on inspection carts or rail robots

Pipeline, Tank and Industrial Asset Inspection

Crawlers inside pipes, magnetic robots on tank walls, remote visual inspection

Compact UC-501 form factor is ideal for confined spaces

Power Substation & Grid Patrol Robots

Read analog gauges, indicator lights, breaker positions

Starvis USB for mixed outdoor/indoor environments and night patrols

Data Center Inspection & Facility Robots

Check cabinet lights, cable states, door positions, leak detection

UC-501 or Starvis USB on small robots that navigate narrow aisles

AI-Based Cooling and Environmental Monitoring

Cameras observe airflow indicators, curtains, racks and tiles

USB cameras used with edge AI boxes to feed digital twins

Hospital Logistics & Service Robots

Deliver medicine, samples and supplies through corridors and elevators

Starvis cameras for low-light wards; compact USB cameras in small housings

Disinfection and Cleaning Robots

Navigation in reflective and low-light environments; monitoring of target zones

UC-501 in compact chassis; global shutter if the robot moves quickly

Rehabilitation & Training Devices

Capturing limb trajectories, motion quality and exercise compliance

OV9281 global shutter to avoid rolling-shutter distortion in fast rehabilitation movements

Hotel and Office Delivery Robots

Lobby and corridor navigation, HMI at the front face of the robot

UC-501 as a front-mounted interaction camera, plus a navigation camera

Autonomous Stores & Smart Shelves

Shelf state, out-of-stock detection, planogram compliance

UC-501 hidden in shelf edges or ceiling bars; USB simplifies maintenance

Retail Kiosks and Smart Vending Machines running YOLOv8 or MediaPipe inference models

User interaction, anti-fraud, product verification

Micro USB cameras integrate cleanly into bezels and panels

Self-Checkout and Loss Prevention Systems

Overhead or side-mounted cameras to track items and bags

Global shutter modules for fast belt motion; Starvis for low-light stores

Security Patrol Robots

Indoor malls, campuses, parking garages

Starvis cameras for low-light routes; UC-501 in compact domes

Campus and Industrial Site Monitoring

Embedded cameras on small robots to cover blind spots traditional CCTV misses

USB cameras used in early deployments to test routes and camera placement

Across all these domains, the pattern is clear:

Whenever Physical AI leaves the lab and meets the real world, you need at least one small, reliable edge camera to “see” what’s going on.

In practice, engineering teams rarely start from a perfect architecture. A typical pattern looks like this:

Start with UC-501 or a Starvis USB camera on a Jetson / RK3588 dev kit

Prove that the model can detect objects, people, pallets, components or defects.

Mount the camera directly on the robot / machine

Tape it to the bumper, embed it in a prototype housing, or screw it to a bracket.

Record real-world data

Use GStreamer, OpenCV or ROS2 nodes to log image streams alongside IMU, lidar or joint telemetry.

Train and refine the perception model

Improve detection under low light, vibration, reflections, motion blur.

Profile latency and thermal budgets

Decide whether the final design needs MIPI or can keep USB in production.

Migrate to production hardware while keeping USB cameras for testing rigs

Use OV9281 or Starvis MIPI on the product, while UC-501 USB remains the lab workhorse.
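For the data-recording step in the workflow above, logged frames are only useful alongside IMU or joint telemetry if the two streams can be matched in time. The sketch below shows one simple way to do that, nearest-timestamp matching, using only the Python standard library. The function names and the 10 ms maximum gap are assumptions for illustration; it also assumes both streams are stamped against the same clock, which a real logger has to ensure separately.

```python
from bisect import bisect_left


def nearest_sample(timestamps, t):
    """Return the index of the timestamp closest to t (timestamps sorted ascending)."""
    if not timestamps:
        raise ValueError("no samples to match against")
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer in time.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(frame_times, imu_times, max_gap_s=0.01):
    """Pair each frame time with the nearest IMU sample index, or None if too far apart."""
    pairs = []
    for ft in frame_times:
        j = nearest_sample(imu_times, ft)
        matched = j if abs(imu_times[j] - ft) <= max_gap_s else None
        pairs.append((ft, matched))
    return pairs
```

Frames that come back paired with `None` had no telemetry close enough in time and are usually better dropped from a training set than guessed at.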

Because they are cheap, flexible and reusable, USB cameras are both:

The first camera to see the world for your Physical AI, and

The last camera to leave your lab when the product ships.
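The latency-profiling step in the workflow above can start very simply: time each pipeline call and look at the tail, not just the average. The sketch below is one way to do this with the standard library. The helper names are hypothetical, and the 95th percentile uses the nearest-rank method, which is one of several common definitions.

```python
import statistics
import time


def profile(step, inputs):
    """Time step(x) for each input; return per-call latencies in milliseconds."""
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        step(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies_ms):
    """Return the median, nearest-rank 95th percentile and worst-case latency."""
    if not latencies_ms:
        raise ValueError("no latency samples")
    ordered = sorted(latencies_ms)
    n = len(ordered)
    # Nearest-rank percentile: ceil(0.95 * n), done in integer arithmetic.
    p95_index = (95 * n + 99) // 100 - 1
    return {
        "p50": statistics.median(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }
```

For a control loop, the `p95` and `max` values matter more than the median, because a single late frame can be enough to miss a stop.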

A simple decision framework:

Choose Goobuy UC-501 micro USB camera when:

Space is extremely tight

You want quick PoC on USB/UVC

Motion is moderate and rolling-shutter distortion is acceptable

You care about flexibility and mounting options

Choose OV9281 global shutter USB camera when:

Your scene or platform is moving fast

You need accurate geometry under motion (robot arms, conveyors, cranes)

You must avoid rolling-shutter distortion (e.g., vertical lines, fast edges)

Choose Starvis USB camera module when:

You operate in low-light or HDR environments

Night-shift warehouses, tunnels, outdoor yards or dim corridors are in scope

You want to push Physical AI beyond well-lit labs into real-world darkness
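To put a number on "fast" in the framework above, a first-order estimate of rolling-shutter skew is often enough. A rolling-shutter sensor reads rows one after another, so a vertical edge moving sideways is displaced between the first and last row by roughly its image speed multiplied by the frame readout time. The sketch below encodes that estimate. The readout time and pixel tolerance are inputs you would take from your own sensor datasheet and application; the example values are illustrative, not measurements of any module named here.

```python
def rolling_shutter_skew_px(speed_px_per_s, readout_time_s):
    """Sideways offset, in pixels, between the first and last row of a moving vertical edge."""
    return speed_px_per_s * readout_time_s


def needs_global_shutter(speed_px_per_s, readout_time_s, tolerance_px):
    """True if the estimated skew exceeds what the application can tolerate."""
    return rolling_shutter_skew_px(speed_px_per_s, readout_time_s) > tolerance_px


# Illustrative values only: an edge moving at 2000 px/s with a 20 ms readout.
example_skew = rolling_shutter_skew_px(2000, 0.020)
```

With these example numbers the estimated skew is about 40 pixels, which would matter for geometry-sensitive tasks such as docking or bin-picking but may be irrelevant for coarse presence detection.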

NVIDIA’s ecosystem around Physical AI – from robotics foundation models and Isaac simulation to edge platforms like Jetson – is rapidly maturing. But every deployment, in every industry listed above, still depends on one simple fact:

An autonomous system cannot reason about what it cannot see.

For the next wave of Physical AI projects, a USB camera for edge AI and Physical AI vision is often the most pragmatic way to give machines that first sight of the physical world.

UC-501 micro USB puts a full vision module into spaces where traditional cameras cannot fit.

OV9281 global shutter makes fast motion and precise geometry usable for robotics control.

Starvis USB brings starlight sensitivity to warehouses, factories, energy sites and hospitals.

If you are designing robots, inspection systems, smart retail devices or autonomous infrastructure for the 2026–2029 Physical AI era, your perception stack is not a detail – it is your foundation.

And that foundation almost always starts with a camera.

USB comes first because the highest friction in Physical AI is not compute, but perception prototyping. Teams need to validate what to see, where to mount sensors, and how models behave under real-world lighting, vibration, motion and occlusion. USB provides the fastest loop of:

mount → stream → capture → train → tune → iterate

Once validated, many production systems migrate to MIPI/GMSL — but USB almost always owns the first 6–18 months of perception R&D.
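One input to that migration decision is whether the video stream fits the link at all. The sketch below does the back-of-envelope arithmetic for uncompressed video. USB 2.0 High Speed signals at 480 Mbit/s, but usable payload throughput is lower and varies by host; the 35 MB/s figure here is an assumption for illustration, so measure your own platform. YUY2 is used as the example format because it stores 2 bytes per pixel.

```python
def raw_bandwidth_mb_s(width, height, fps, bytes_per_pixel):
    """Uncompressed video bandwidth in megabytes per second (1 MB = 1e6 bytes)."""
    return width * height * fps * bytes_per_pixel / 1e6


def fits_link(width, height, fps, bytes_per_pixel, usable_mb_s):
    """True if the uncompressed stream fits within the usable link throughput."""
    return raw_bandwidth_mb_s(width, height, fps, bytes_per_pixel) <= usable_mb_s


# Assumption: usable USB 2.0 payload throughput; measure on your own host.
ASSUMED_USB2_USABLE_MB_S = 35.0

vga_ok = fits_link(640, 480, 30, 2, ASSUMED_USB2_USABLE_MB_S)
hd_ok = fits_link(1280, 720, 30, 2, ASSUMED_USB2_USABLE_MB_S)
```

Under these assumptions, 640×480 YUY2 at 30 fps needs about 18.4 MB/s and fits, while 1280×720 at 30 fps needs about 55.3 MB/s and does not. That is why higher resolutions over USB 2.0 usually rely on compressed formats such as MJPEG, or move to a faster interface.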

Both outcomes occur: some products migrate away from USB, and others ship with it. In high-volume autonomous systems (AMRs, forklifts, mining, robotaxis), final cameras often migrate to MIPI/GMSL because of thermal, EMI and cable-routing constraints.

But in kiosks, medical devices, smart retail terminals, facility robots, data center inspection, hospitality robots and digital signage AI boxes, USB remains in the final production BOM, because of:

UVC, so no custom drivers

Shorter validation cycles

Easier field replacement

Lower total lifetime cost

Faster RMA & maintenance

Zero kernel integration risk

Physical AI breaks into a four-layer autonomy stack: Sensors → Perception → Planning → Actuation

The stack cannot function without grounding. USB cameras are the easiest way to provide grounding during the deployment & scale-up phase because they plug directly into Jetson/RK3588/IPC nodes that already run perception models.

Real-world perception fails for reasons synthetic simulation rarely predicts:

low light / HDR / glare / motion blur / vibration coupling / reflections & specular highlights / wrong lens FOV / ins